DNS Forwarder Failures

A NAT Port Exhaustion Story

| Owner | Emile Hofsink |

| Genre | Epic Poetry 🌬️ |

Contents

- Haiku

- Preamble

- Part 1: NAT Port Allocation in GCP

- Part 2: Active Directory DNS, Forwarders and Root Hints

- Part 3: A (DNS) Resolution

- Part 4: The Questions That Haunt

- The End

Haiku

It’s always DNS… but sometimes it gets a helping hand

Preamble

On a brisk summer’s morn, an issue was unearthed where certain DNS lookups for external web addresses would very occasionally fail (~1-2 failures per minute), whilst remaining stable for the bulk of the time.

Throughout a day of troubleshooting, it was discovered that these occasional failures were on lookups to the DNS forwarders assigned to server01 and server02, the Shared Services domain controllers/DNS servers for the environment.

The interim fix eventually decided upon was to use Root Hints instead of forwarders. Why Root Hints work where forwarders fail is a story for further down.

To fully understand why this issue may have occurred, and why it was not seen before, we’ll need to:

- Understand the infrastructure in place

- Understand the flows that take place as part of a NAT assignment

- Understand some of the differences between DNS Forwarders and Root Hints

- Ask ourselves some questions

Part 1: NAT Port Allocation in GCP

Firstly, a bit of information on how port allocation generally works for NAT gateways in GCP:

Each NAT IP address on a Cloud NAT gateway offers 64,512 TCP source ports and 64,512 UDP source ports. TCP and UDP each support 65,536 ports per IP address, but Cloud NAT doesn’t use the first 1,024 well-known (privileged) ports.

When a Cloud NAT gateway performs source network address translation (SNAT) on a packet sent by a VM, it changes the packet’s NAT source IP address and source port.

By default, 64 ports are allocated to each VM. Those 64 ports cap the concurrent connections the VM can make to any one destination 3-tuple (destination IP address, destination port and protocol: the 3 pieces of information used to make NAT decisions). This allows for 1,008 VMs per NAT IP address (64,512 ports per address / 64 ports per VM).

However, this means each VM only has 64 ports to work with, which will be important later.
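The port arithmetic above is easy to check. This is a minimal Python sketch; the constant and function names are ours, not Cloud NAT’s, and it assumes static allocation, where every VM reserves the same fixed number of ports.

```python
# Cloud NAT port arithmetic, using the figures quoted above.
TOTAL_PORTS = 65_536       # ports per IP address, per protocol (TCP or UDP)
PRIVILEGED_PORTS = 1_024   # ports 0-1023, which Cloud NAT does not use
DEFAULT_PORTS_PER_VM = 64  # default ports reserved per VM


def usable_ports_per_ip() -> int:
    """NAT source ports available on one NAT IP address, per protocol."""
    return TOTAL_PORTS - PRIVILEGED_PORTS


def vms_per_nat_ip(ports_per_vm: int = DEFAULT_PORTS_PER_VM) -> int:
    """VMs one NAT IP address can serve if each VM reserves ports_per_vm ports."""
    return usable_ports_per_ip() // ports_per_vm


print(usable_ports_per_ip())  # 64512
print(vms_per_nat_ip())       # 1008
print(vms_per_nat_ip(512))    # 126
```

Raising the per-VM allocation trades VM capacity for headroom: if each VM reserved 512 ports, one NAT IP address would serve only 126 VMs.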

A Typical Cloud NAT Flow

In this example, a VM with primary internal IP address 10.240.0.4, without an external IP address, needs to download an update from the external IP address 203.0.113.1. The configuration of the nat-gw-us-east gateway is as follows:

- Minimum ports per instance: 64

- Manually assigned two NAT IP addresses: 192.0.2.50 and 192.0.2.60

- Provides NAT for the primary IP address range of subnet-1

Cloud NAT follows the port reservation procedure to reserve the following NAT source IP address and source port tuples for each of the VMs in the network. For example, the Cloud NAT gateway reserves 64 source ports for the VM with internal IP address 10.240.0.4. The NAT IP address 192.0.2.50 has 64 unreserved ports, so the gateway reserves the following set of 64 NAT source IP address and source port tuples for that VM:

192.0.2.50:34000 through 192.0.2.50:34063
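To make the reservation step concrete, here is a hypothetical, simplified Python model. Cloud NAT’s real reservation procedure is internal to GCP; `NatIp`, `NatGateway` and the `next_free` pointer are names we made up, and we start 192.0.2.50 at port 34000 purely so the output matches the example above.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

LAST_PORT = 65_535


@dataclass
class NatIp:
    address: str
    next_free: int = 1_024  # hypothetical: lowest port not yet reserved on this IP

    def unreserved(self) -> int:
        return LAST_PORT - self.next_free + 1


@dataclass
class NatGateway:
    nat_ips: List[NatIp]
    ports_per_vm: int = 64
    reservations: Dict[str, Tuple[str, range]] = field(default_factory=dict)

    def reserve(self, vm_ip: str) -> Tuple[str, range]:
        """Reserve ports_per_vm consecutive ports on the first NAT IP with enough room."""
        for nat_ip in self.nat_ips:
            if nat_ip.unreserved() >= self.ports_per_vm:
                start = nat_ip.next_free
                nat_ip.next_free += self.ports_per_vm
                block = (nat_ip.address, range(start, start + self.ports_per_vm))
                self.reservations[vm_ip] = block
                return block
        raise RuntimeError("no NAT IP address has enough unreserved ports")


# Start 192.0.2.50 at port 34000 purely to reproduce the example above.
gateway = NatGateway([NatIp("192.0.2.50", next_free=34_000), NatIp("192.0.2.60")])
nat_ip, ports = gateway.reserve("10.240.0.4")
print(f"{nat_ip}:{ports[0]} through {nat_ip}:{ports[-1]}")
# 192.0.2.50:34000 through 192.0.2.50:34063
```

When the first NAT IP address runs out of unreserved ports, the next VM’s block simply comes from 192.0.2.60.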

When the VM sends a packet to the update server 203.0.113.1 on destination port 80, using the TCP protocol, the following occurs:

- The VM sends a request packet with these attributes:
  - Source IP address: 10.240.0.4, the primary internal IP address of the VM
  - Source port: 24000, the ephemeral source port chosen by the VM’s operating system
  - Destination address: 203.0.113.1, the update server’s external IP address
  - Destination port: 80, the destination port for HTTP traffic to the update server
  - Protocol: TCP

- The nat-gw-us-east gateway performs SNAT on egress, rewriting the request packet’s NAT source IP address and source port. The modified packet is sent to the internet if the VPC network has a route for the 203.0.113.1 destination whose next hop is the default internet gateway. A default route commonly meets this requirement.
  - NAT source IP address: 192.0.2.50, from one of the VM’s reserved NAT source IP address and source port tuples
  - Source port: 34022, an unused source port from one of the VM’s reserved source port tuples
  - Destination address: 203.0.113.1, unchanged
  - Destination port: 80, unchanged
  - Protocol: TCP, unchanged

- When the update server sends a response packet, that packet arrives on the nat-gw-us-east gateway with these attributes:
  - Source IP address: 203.0.113.1, the update server’s external IP address
  - Source port: 80, the HTTP response from the update server
  - Destination address: 192.0.2.50, matching the original NAT source IP address of the request packet
  - Destination port: 34022, matching the source port of the request packet
  - Protocol: TCP, unchanged

- The nat-gw-us-east gateway performs destination network address translation (DNAT) on the response packet, rewriting the response packet’s destination address and destination port so that the packet is delivered to the VM:
  - Source IP address: 203.0.113.1, unchanged
  - Source port: 80, unchanged
  - Destination address: 10.240.0.4, the primary internal IP address of the VM
  - Destination port: 24000, matching the original ephemeral source port of the request packet
  - Protocol: TCP, unchanged

Voila, a successful request for a website on port 80 out to the internet behind a Cloud NAT gateway!
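The four steps of that flow can be sketched as a toy translation table in Python. This is a hypothetical model, not Cloud NAT’s implementation: `Packet` and `NatTable` are names we made up, the table simply takes the lowest free reserved port (34000 rather than the 34022 in the example), and it never reuses a port for a different destination, which real Cloud NAT can do.

```python
from dataclasses import dataclass, replace
from typing import Dict, Tuple


@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str


class NatTable:
    """Toy SNAT/DNAT table for one VM's reserved ports on one NAT IP (hypothetical)."""

    def __init__(self, nat_ip: str, reserved_ports: range):
        self.nat_ip = nat_ip
        self.free_ports = list(reserved_ports)
        # (protocol, NAT port, remote IP, remote port) -> (VM IP, VM port)
        self.mappings: Dict[Tuple[str, int, str, int], Tuple[str, int]] = {}

    def snat(self, pkt: Packet) -> Packet:
        """Egress: swap the VM's source IP/port for the NAT IP and an unused reserved port."""
        if not self.free_ports:
            raise RuntimeError("no unused reserved ports left: packet dropped")
        nat_port = self.free_ports.pop(0)  # this sketch just takes the lowest free port
        key = (pkt.protocol, nat_port, pkt.dst_ip, pkt.dst_port)
        self.mappings[key] = (pkt.src_ip, pkt.src_port)
        return replace(pkt, src_ip=self.nat_ip, src_port=nat_port)

    def dnat(self, pkt: Packet) -> Packet:
        """Ingress: map the response's destination back to the VM's IP and ephemeral port."""
        key = (pkt.protocol, pkt.dst_port, pkt.src_ip, pkt.src_port)
        vm_ip, vm_port = self.mappings[key]
        return replace(pkt, dst_ip=vm_ip, dst_port=vm_port)


table = NatTable("192.0.2.50", range(34_000, 34_064))
request = table.snat(Packet("10.240.0.4", 24_000, "203.0.113.1", 80, "TCP"))
response = table.dnat(Packet("203.0.113.1", 80, request.src_ip, request.src_port, "TCP"))
print(request)
print(response)
```

The response is only deliverable because the gateway remembered which VM and ephemeral port sit behind NAT port 34000; the same bookkeeping is what runs out when a VM’s reserved ports are all in use.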

Part 2: Active Directory DNS, Forwarders and Root Hints

Great, we can make external connections out to the internet. They work, they’re successful - 100% of the time! Right?

Well, unfortunately, there might be cases where they are dropped. To understand this, we first need to know the differences between Forwarders and Root Hints:

A Root Hint And A Forwarder Walk Into A Bar

…and they get asked for directions to http://www.aap.com.au. How does each of them respond?

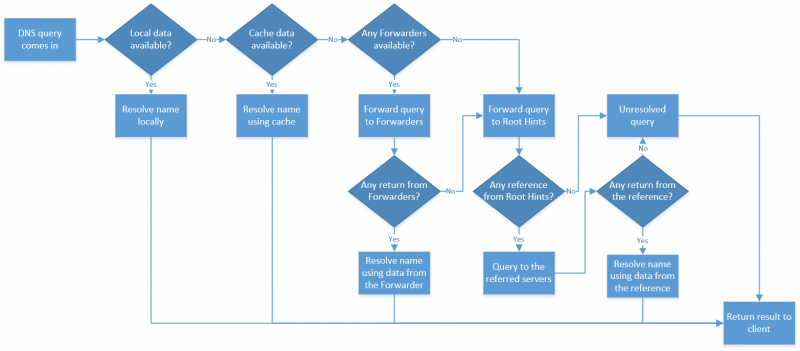

When we talk about DNS Forwarders and Root Hints, we’re talking about query forwarding. Forwarding only occurs when the DNS server cannot resolve a query using its own data and local cache. Usually this happens when a query comes in for an external name that is outside the zones configured on the DNS server. Knowing how DNS name resolution works is the key to understanding DNS Forwarders and Root Hints in Windows DNS Server. The following flowchart depicts the sequence for DNS name resolution:

Source: https://www.mustbegeek.com/understanding-dns-forwarders-and-root-hints-in-windows-dns-server/

From the above flow, a couple of things stand out:

- Forwarders are tried in order, starting with the first in the list (e.g. 8.8.8.8 for dns.google), and the DNS server looks for a result. If there’s no response, it moves on to the next forwarder in the list, and so on.

- Root Hints, on the other hand, give references to where a lookup can be performed (the root name servers) and then let the DNS server query those servers directly, following their referrals.

- Both of these work in a loop until an answer is returned or the list is exhausted.

The flowchart doesn’t account for DNS forwarder failures. This matters because the seamless failover is only useful insofar as responses are returned; whether or not those responses contain an answer is left up to the flow decision above.

Based on this, we have one extremely critical clue to solve the mystery…

For a DNS forwarder, being able to communicate with the first server in the list multiple times is critical.
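The difference between the two strategies can be sketched in a few lines of Python. Everything here is a toy: the response model, the server names ("root", "au-ns", "aap-ns"), the second forwarder 8.8.4.4 and the answer 203.0.113.10 are made up for illustration, and real resolvers also handle timeouts, negative answers and caching, which this ignores.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Toy response model (hypothetical): ("answer", ip), ("referral", [servers]) or None for no response.
Response = Optional[Tuple[str, object]]
QueryFn = Callable[[str, str], Response]


def resolve_via_forwarders(name: str, forwarders: List[str], query: QueryFn) -> Optional[object]:
    """Ask forwarders in list order; only move on when one does not answer."""
    for server in forwarders:
        response = query(server, name)
        if response is not None and response[0] == "answer":
            return response[1]
    return None  # every forwarder exhausted


def resolve_via_root_hints(name: str, root_hints: List[str], query: QueryFn,
                           max_referrals: int = 16) -> Optional[object]:
    """Start at the root servers and follow referrals until some server answers."""
    servers = list(root_hints)
    for _ in range(max_referrals):
        for server in servers:
            response = query(server, name)
            if response is None:
                continue  # no response: try the next server at this level
            kind, value = response
            if kind == "answer":
                return value
            servers = list(value)  # referral: query the servers we were pointed at
            break
        else:
            return None  # every server at this level exhausted
    return None  # gave up after too many referrals


# Made-up data: the first forwarder drops the query, the second one answers.
WORLD: Dict[Tuple[str, str], Response] = {
    ("8.8.8.8", "www.aap.com.au"): None,
    ("8.8.4.4", "www.aap.com.au"): ("answer", "203.0.113.10"),
    ("root", "www.aap.com.au"): ("referral", ["au-ns"]),
    ("au-ns", "www.aap.com.au"): ("referral", ["aap-ns"]),
    ("aap-ns", "www.aap.com.au"): ("answer", "203.0.113.10"),
}


def fake_query(server: str, name: str) -> Response:
    return WORLD.get((server, name))


print(resolve_via_forwarders("www.aap.com.au", ["8.8.8.8", "8.8.4.4"], fake_query))
print(resolve_via_root_hints("www.aap.com.au", ["root"], fake_query))
```

Note where the packets go: with forwarders, every cache miss is sent to the same first server; with root hints, queries fan out across root, TLD and authoritative servers, so no single destination takes all of the traffic.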

NAT Port Allocation for DNS Lookups

OK, so now we know that if we are using a forwarder to 8.8.8.8, we will be communicating with it a fair amount to find information about a website. Why could this be an issue though? We have working internet access, and that’s been proven out.

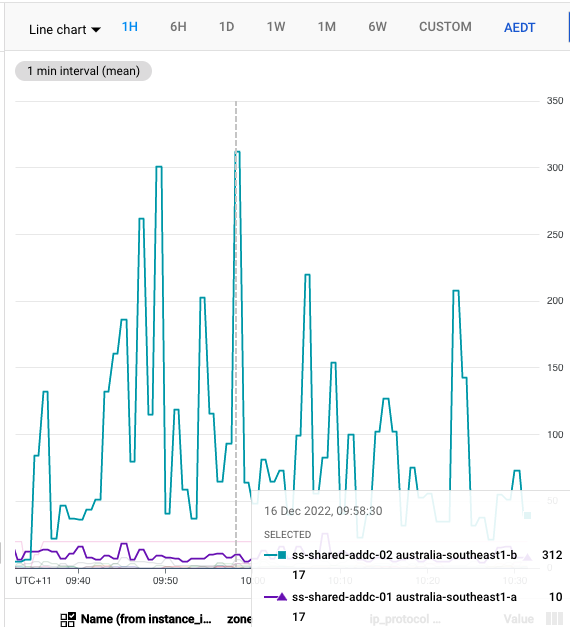

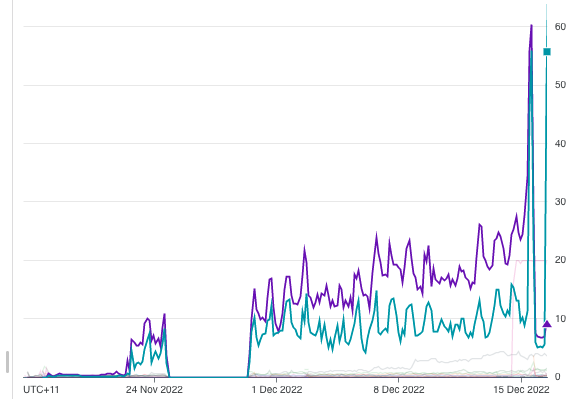

Let me present the below chart:

In the above we can see the amount of port usage between 2 different Active Directory boxes on .

The configuration for these boxes is as follows:

| Server | DNS Configuration |
| --- | --- |
| ss-addc-01 | Root Hints |
| ss-addc-02 | DNS Forwarders (to 8.8.8.8) |

So why the incredibly high port usage on ss-addc-02? Simply put, when looking up an address, we’re always going to ask 8.8.8.8 to give us some information. What else do we know about our environment?

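Well, when we are behind a Cloud NAT gateway, every concurrent connection to the same destination IP, on the same destination port, using the same protocol (the magic tuple), needs its own NAT source port. Forwarded DNS queries all go to 8.8.8.8 on port 53, normally over UDP, so every in-flight lookup shares that one tuple.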

We also know something else important… by default, our Cloud NAT gateway only allocates 64 ports to a given VM.

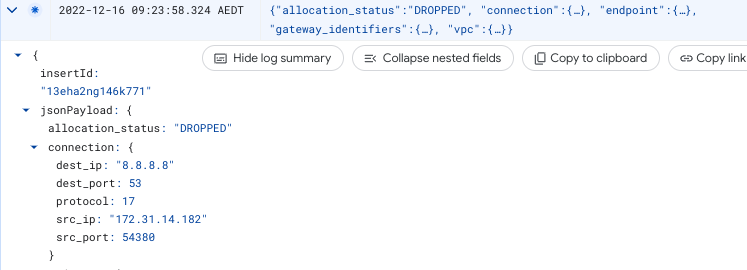

The End Of All DNS Lookups

So, what happens when we need >64 simultaneous lookups to 8.8.8.8?

💀 Cloud NAT will drop the connection 💀

This reveals the cause of the very occasional drops we experience in DNS lookups, explains why they are only occasional, and explains why they only happen on GCP.
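A toy Python model shows the failure. As we understand Cloud NAT, a UDP mapping is held until it has been idle for the UDP mapping idle timeout (30 seconds by default), so a lookup that finishes in milliseconds can still tie up its port for far longer; `close` stands in for that timeout here. `VmPortPool` is a hypothetical name, the port block reuses the 34000 example, and 198.41.0.4 (a.root-servers.net) is just an example of a different destination.

```python
from collections import defaultdict
from typing import DefaultDict, Optional, Set, Tuple

ThreeTuple = Tuple[str, int, str]  # (destination IP, destination port, protocol)


class VmPortPool:
    """Toy model of one VM's statically allocated NAT source ports (hypothetical)."""

    def __init__(self, first_port: int = 34_000, ports_per_vm: int = 64):
        self.ports = range(first_port, first_port + ports_per_vm)
        # For each destination 3-tuple, the NAT source ports currently mapped to it.
        self.in_use: DefaultDict[ThreeTuple, Set[int]] = defaultdict(set)

    def open(self, dst_ip: str, dst_port: int, protocol: str) -> Optional[int]:
        """Return a NAT source port not yet in use for this 3-tuple, or None (dropped)."""
        used = self.in_use[(dst_ip, dst_port, protocol)]
        for port in self.ports:
            if port not in used:
                used.add(port)
                return port
        return None  # all ports busy for this destination: the new connection is dropped

    def close(self, dst_ip: str, dst_port: int, protocol: str, port: int) -> None:
        """Release a mapping, e.g. once it has been idle long enough to time out."""
        self.in_use[(dst_ip, dst_port, protocol)].discard(port)


pool = VmPortPool()
lookups = [pool.open("8.8.8.8", 53, "UDP") for _ in range(65)]
other = pool.open("198.41.0.4", 53, "UDP")
print(sum(p is not None for p in lookups), lookups[-1])  # 64 None
print(other)                                             # 34000: a different 3-tuple
```

The 65th concurrent lookup to 8.8.8.8 gets no port, while a query to a different destination still succeeds straight away, which is consistent with ss-addc-01 on Root Hints never hitting the wall.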

🌏

We still have a few questions, but we’ll leave them for the end. Let us continue to the resolution.

Part 3: A (DNS) Resolution

So we know why we’re getting these occasional, but still equally annoying, drops in DNS resolution. How do we go about solving this, how do we get to the end of the tunnel?

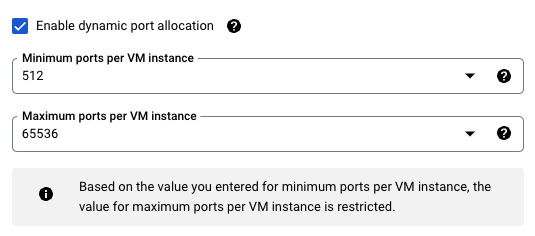

Cloud NAT offers a solution to this called Dynamic Port Allocation, which lets you set a minimum number of ports per VM and dynamically increases that number, up to a configured maximum, when more ports are required.

Based on the metrics and logs over the past 2 days, we know we’re going to need at least 300 ports available per VM (ss-addc-02 has peaked at 300 connections since changing over). To give us some breathing room, I think 512 ports would be appropriate to assign.

And, as expected, after setting this, no more dropouts were experienced when using DNS Forwarders.
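For reference, 512 is the next power of two above the observed peak of 300. As we read the Cloud NAT documentation, the minimum and maximum ports per VM must be powers of two when Dynamic Port Allocation is enabled. The sketch below also models how an allocation might grow; the doubling behaviour and the minimum of 64 are assumptions for illustration, not a description of Cloud NAT internals.

```python
from typing import List


def next_power_of_two(n: int) -> int:
    """Smallest power of two >= n, for n >= 1."""
    return 1 << (n - 1).bit_length()


def dpa_allocations(min_ports: int, max_ports: int, demand: int) -> List[int]:
    """Simplified model (assumption): start at min_ports and double while demand
    exceeds the current allocation, never going above max_ports."""
    allocation = [min_ports]
    while allocation[-1] < demand and allocation[-1] < max_ports:
        allocation.append(min(allocation[-1] * 2, max_ports))
    return allocation


peak = 300                             # peak connections seen on ss-addc-02
print(next_power_of_two(peak))         # 512
print(dpa_allocations(64, 512, peak))  # [64, 128, 256, 512]
```

Under this model, a quiet VM stays at its minimum and only a busy forwarder like ss-addc-02 climbs towards the 512 ceiling.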

☀️ A happy ever after ☀️

Part 4: The Questions That Haunt

So we have a solution in place that is working nicely. But at the end of our journey, we ask ourselves some final questions…

- Why did this only start happening on the particular incident date?
  - The sudden increase in traffic does correlate with the branch site traffic coming across to DNS in GCP.
  - The graphs for the logs and monitoring only show increased traffic since that day, so prior to the cutover this amount of traffic was negligible.

- How could branch sites add this much additional traffic for DNS?
  - This one is a bit unknown and puzzling to me for a couple of reasons:
    - Why does the cache not work properly? I cannot imagine it’s the TTL getting hit over and over.
    - Why would sites add more traffic than all these servers constantly performing lookups, which have been successfully looking up information for the past month or so?
    - Why would lookups hold the connection for so long as to need additional source ports?
  - Potentially:
    - Some PCs, processes or otherwise are constantly performing strange lookups to the web due to some software they have installed.
    - Perhaps, due to the nature of web browsing, which sometimes needs to look up hundreds of different CDNs for data, the DNS lookups are being particularly taxed by general web browsing.

- Why forwarders rather than root hints?
  - The simple answer is security: you can lock down your AD servers to only contact particular external addresses.
  - The more complicated answer is performance, as caching plus looking up the first server immediately leads to reduced latency.
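The caching question hinges on the fact that a DNS server only goes back to its forwarder when it holds no unexpired answer for a name. A minimal TTL cache sketch in Python follows; `TtlCache`, the fake clock and the TTL values are hypothetical, chosen only to keep the example deterministic.

```python
import time
from typing import Callable, Dict, Tuple


class TtlCache:
    """Minimal DNS-style answer cache (hypothetical): entries expire after their TTL."""

    def __init__(self, upstream: Callable[[str], Tuple[str, int]],
                 clock: Callable[[], float] = time.monotonic):
        self.upstream = upstream  # returns (answer, ttl_seconds), e.g. a forwarder query
        self.clock = clock
        self.entries: Dict[str, Tuple[str, float]] = {}
        self.upstream_queries = 0

    def lookup(self, name: str) -> str:
        now = self.clock()
        cached = self.entries.get(name)
        if cached is not None and cached[1] > now:
            return cached[0]  # hit: nothing leaves the server, no NAT port used
        answer, ttl = self.upstream(name)  # miss or expired: one upstream query
        self.upstream_queries += 1
        self.entries[name] = (answer, now + ttl)
        return answer


# Fake clock and upstream so the example is deterministic; the 60-second TTL is made up.
now = [0.0]
cache = TtlCache(lambda name: ("203.0.113.10", 60), clock=lambda: now[0])
for second in range(0, 300, 5):  # one lookup every 5 seconds for 5 minutes
    now[0] = float(second)
    cache.lookup("www.aap.com.au")
print(cache.upstream_queries)    # 5
```

Sixty client lookups cost only five forwarder queries here; with a 20-second TTL the same loop would need 15, so records with short TTLs send the server back upstream far more often.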

The End

We’ve found our problem and our solution, but we continue to think on the questions we’ve picked up along the way. Perhaps some further investigation is required into the problems that continue to haunt us. They may yet lead us on another journey…